Practical AI-assisted programming

I began using Cursor IDE on a consistent basis in Jan 2025, although I knew about it since 2023. I didn't realise I gained some decent exposure at applying LLMs to refine my PRs until I kept seeing posts of people getting frustrated with their lack of productivity with LLMs, relegating AI as a sham.

This is certainly untrue, although I think it's "absolutely right" to think that LLMs of today (2025) are still far away from replacing engineers. So we kind of have to realise that we're in company of a fast working engineer, and we might get to extract some juice out of it if we know what we want.

In many ways this might feel similar to pair programming with an extremely enthusiastic (not necessarily talented) junior engineer, but rather someone very cooperative & fast.

The only thing that I always had in mind while coding with AI is to feed as much info as need into its context window. More importantly, don't feed it stuff it wouldn't find necessary. "Noise" is highly detrimental to LLM outputs.

This of course requires some familiarity with the codebase but I submit to you even a high level overview is sufficient if you know what files might be necessary for the LLM in accomplishing the task at hand. (you can say something like, scan the codebase and look for the files defining the database schemas for your reference, if you yourself are new to the codebase and can't locate where they are). So if you learn nothing from this article, I want you to leave with this. In most cases, the AI IDEs' grep or semantic search will probably do the trick here.See this example, and what the agent did underneath the prompt:

Note I didn't add any files to the context, I relied on the IDE's semantic search to find the files.

look at the analytics router and see wherever it's being referenced in (analytics service file) as well as the thin wrappers from the functions exported from there in admin.service, user.service and client.service files. let me know if some thing is off that doesnt seem intentional. look up the prisma files to see if we're missing any indexes/redundant indexes for the related queries (we use mongodb).

heads up: we knowingly don't return the time in program for the clients.

look at the frontend files where these routes are being called. see if we could maybe clean them or refactor them or make em better.

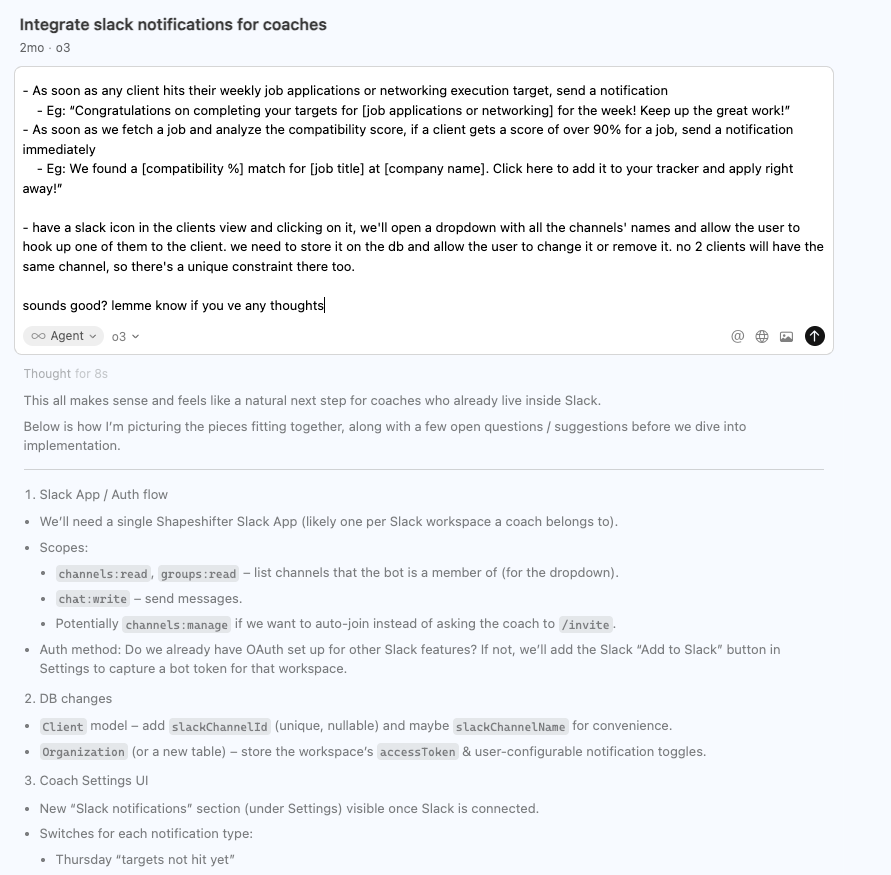

I am not showing you how to put the perfect prompt, but if you know the direction you want the LLM to go, you will end up with remarkably satisfying results either in a self PR review or building features from scratch. I also ended up using AI for stuff/features I had no idea about. For example, I wasn't familiar with Slack as I didn't use it for more than 2 months since the start of my career. And towards the end of my time in shapeshifter, I had to ship a feature where I use their Slack bot and integrate it into the application for periodic and event based messages based on some business logic.

It is evident that this is a big feature and I, by any means, don't want to one-shot it because I can't (and probably shouldn't). Just like the timeless writing advice by George RR Martin, we "write what you know" to the LLM

Here's how I started after an initial discussion in the meet, notice how I didn't add any files to the context for reference and didn't remotely expect this to be done anytime soon. (in relative terms)

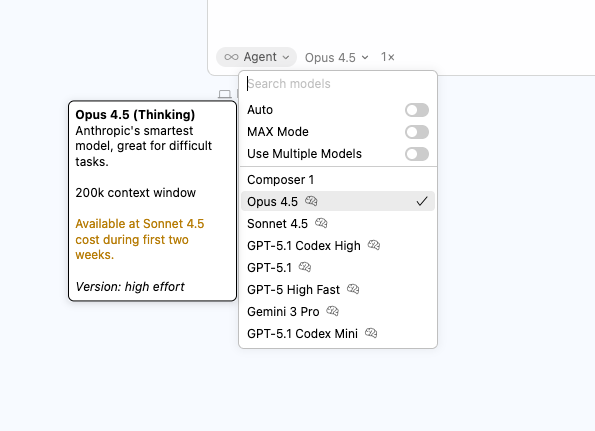

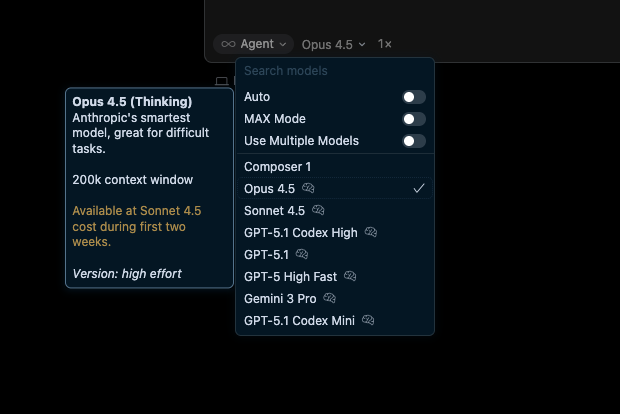

Even after all these new models, I still ❤️ openai's o3 in Cursor for brainstorming, reviewing and anything that does not involve writing and editing files (in my experience it's bad at file changes, where something like a Sonnet 4 is much more enthusiastic)

See how it starts. I'm not a pro prompt engineer, so I try to talk to the LLM like I would to another person.

Refer this for how I end it, just like a human would.

Formatted for readability:

ok we need to ship a new feature. since a lot of coaches are using our slack to communicate with their clients we should integrate our notifications with slack properly. so high level here's what i think this would look like:

- Coach will add the slackbot to the private channels one by one

Eg: /invite @shapeshifter

- In the settings page:

- * There will be Slack notifications section

- * In this section, a coach can toggle various types of notifications he wants to be sent to all the clients

- types of notifications (for now) include:

Time-based

- * Every Thursday, send a notification to all clients who are yet to hit their job application or networking execution targets for the week.

Eg: "Hey, you're just [job remaining number] job applications and [networking remaining number] networking contacts away from hitting your weekly targets! Click here to finish them!"

- * Every Sunday morning, send a notification to all clients who have their overall execution score for the week below 30%

Eg: "Oh no, you've only hit [execution score %] of your goals this week. With one day left, let's buckle up?"

Trigger-based

- * As soon as any client hits their weekly job applications or networking execution target, send a notification

Eg: "Congratulations on completing your targets for [job applications or networking] for the week! Keep up the great work!"

- * As soon as we fetch a job and analyze the compatibility score, if a client gets a score of over 90% for a job, send a notification immediately

Eg: We found a [compatibility %] match for [job title] at [company name]. Click here to add it to your tracker and apply right away!"

- * Every Thursday, send a notification to all clients who are yet to hit their job application or networking execution targets for the week.

- - have a slack icon in the clients view and clicking on it, we'll open a dropdown with all the channels' names and allow the user to hook up one of them to the client. we need to store it on the db and allow the user to change it or remove it. no 2 clients will have the same channel, so there's a unique constraint there too.

sounds good? lemme know if you've any thoughts

You don't have to wait to be as specific as me. In this case I was lucky because I had some stuff a Notion page that I was shared, but I didn't know how Slack bots are configured, and all the intricacies of the implementation when I was presented with this task.

And the beauty of o3 is that I had some really wholesome discussions with it, whereas with most other models they've been kind of yes-men (unless nudged with a pessimistic-ish prompt)

Formatted for readability:

This all makes sense and feels like a natural next step for coaches who already live inside Slack.

Below is how I'm picturing the pieces fitting together, along with a few open questions / suggestions before we dive into implementation.

Slack App / Auth flow

- * We'll need a single Shapeshifter Slack App (likely one per Slack workspace a coach belongs to).

Scopes:

- -

channels:read,groups:read– list channels that the bot is a member of (for the dropdown). - -

chat:write– send messages. - - Potentially

channels:manageif we want to auto-join instead of asking the coach to /invite.

- -

- * Auth method: Do we already have OAuth set up for other Slack features? If not, we'll add the Slack "Add to Slack" button in Settings to capture a bot token for that workspace.

DB changes

- * Client model – add

slackChannelId(unique, nullable) and maybeslackChannelNamefor convenience. - * Organization (or a new table) – store the workspace's

accessToken& user-configurable notification toggles.

- * Client model – add

Coach Settings UI

- * New "Slack notifications" section (under Settings) visible once Slack is connected.

Switches for each notification type:

- - Thursday "targets not hit yet"

- - Sunday "execution < 30 %"

- - Instant "target achieved"

- - Instant "job compatibility > 90 %"

- * We can default them all to ON after connection and let the coach toggle off.

Client view – channel assignment

- * Add a Slack icon column.

- * Click shows a dropdown populated with

conversations.listresults (only channels the bot is already in). - * Saving writes the

slackChannelIdto the client; uniqueness constraint enforced in Prisma:@@unique([organizationId, slackChannelId]) - * Also allow "Unlink channel" from the dropdown.

Message delivery

- * Central Slack service utilising

@slack/web-api. Scheduled jobs (cron):

- - Thursday 8 AM coach's local time

- - Sunday 9 AM coach's local time

We can piggy-back on whatever scheduler we use for email digests (if any); otherwise a simple CRON in our worker queue.

Trigger-based hooks:

- * When Execution stats update (we already calculate remaining/achieved in the server code) → fire Slack message if thresholds crossed.

- * When a new job analysis finishes → fire Slack message if compatibility ≥ 0.9.

- * Central Slack service utilising

Failure handling / logging

- * Log Slack API errors (rate limits, revoked tokens,

unknown_channel, etc.) with Sentry tags. - * If a channel assignment no longer exists, notify the coach in-app and clear the assignment.

- * Log Slack API errors (rate limits, revoked tokens,

OPEN QUESTIONS / EDGE CASES

- * Multiple coaches (admins) in the same org – should each have independent Slack workspaces/tokens, or do we assume one workspace per org?

- * Time-zone source – use the organization's configured TZ or each client's? (We currently store timezone on Client, but weekly goals feel coach-driven, so org TZ seems fine.)

- * Wording approval – do you want the exact copy you provided hard-coded for now, or should we store message templates in DB for future editing?

- * Where in the Settings page would you like the "Connect Slack" CTA? Top-level tabs are already crowded; we can nest Slack inside "Notifications".

Let me know if any of the above doesn't align with your expectations or if we should adapt the scope (e.g. delay OAuth and ship with a manually pasted bot token to move faster). Once we settle the questions, I'll break this into concrete implementation tasks.

So, just write what you know. Choose the right kind of model for the right task, and you'll be ahead of three-quarters of the crowd already. I am not an expert but you can use any of the recent cheap models for relatively straightforward tasks and intelligent ones like this can be used for more complex tasks. If you hover over each model in Cursor's model dropdown, you will see such a short description of each of them.

PS: This is the start of a very long conversation I had for this feature. I sometimes find it really handy to ask o3 for suggestions and without bloating the context window, open a new tab and use something like a sonnet 4 to paste the suggestion into chat and use it for implementing the same, with optional additional context. This is incredibly useful as it doesn't let you bloat the context window for the main discussion, and gets the job done as well. After you implement the changes, you can (and probably should) confirm in the main chat by saying something like "I made some changes, is everything good? if yes, we can proceed to the next steps"

What I should have said

I briefly touched on the fact that since LLMs are too agreeable, and we often need to nudge them in a fashion which is not something that comes to us, when we talk to humans. This is hard to explain theoretically and won't make much sense unless I give you an example. There can be tons of these cases.

I remember long time back I asked to explain what a CMS is. I asked ChatGPT and it gave an answer, and since it conformed to my initial impressions, I followed up with, "So it's like an admin panel for non-developers" and to which it agreed and explained how it was a good analogy. Not saying it's wrong, but you can see the issue here.

LLMs err on agreeing if the response is remotely similar to what you are suggesting.

Knowing about LLMs what we know now, they won't disagree with you unless you're terribly wrong. So what I should have said would have been something like, "So how's it different from something like an admin-panel for non developers?"

This is more intentional. Before you ask questions like these, pause for a moment and try to predict the outcome. Hopefully this won't be a big problem in a few years as new models get more smart, but this is a good way to tackle it in day to day life.

F*** it I'll just do it myself

A very important thing that comes up almost regularly and needs to be addressed with AI assisted coding is how to handle poor outputs. If you're in the middle of a nice implementation but adjudicate that the result of one of your messages is either unsatisfactory, wrong, or just straight up trash; you need to revert those changes (using the revert icon on the bottom right on the textbox) and refine/split the question. This is to make sure that you don't lose important context of a specific chat, while progressing with the task.

Another case which is relatively trivial but worth talking about is handling issues in the ui. LLMs can really suck trying to implement a basic ui, sometimes they'll use native DOM elements instead of your component library unless you make them aware of it.

I personally try to feed screenshots of what went wrong as I (re)type what I want and if you have a designer in your team or with you, it'd help a lot to feed the screenshot of the design you need to the LLM. Reverting and feeding screenshots can go hand in hand and it can be tempting to give it a shot on your own sometimes, which you should.

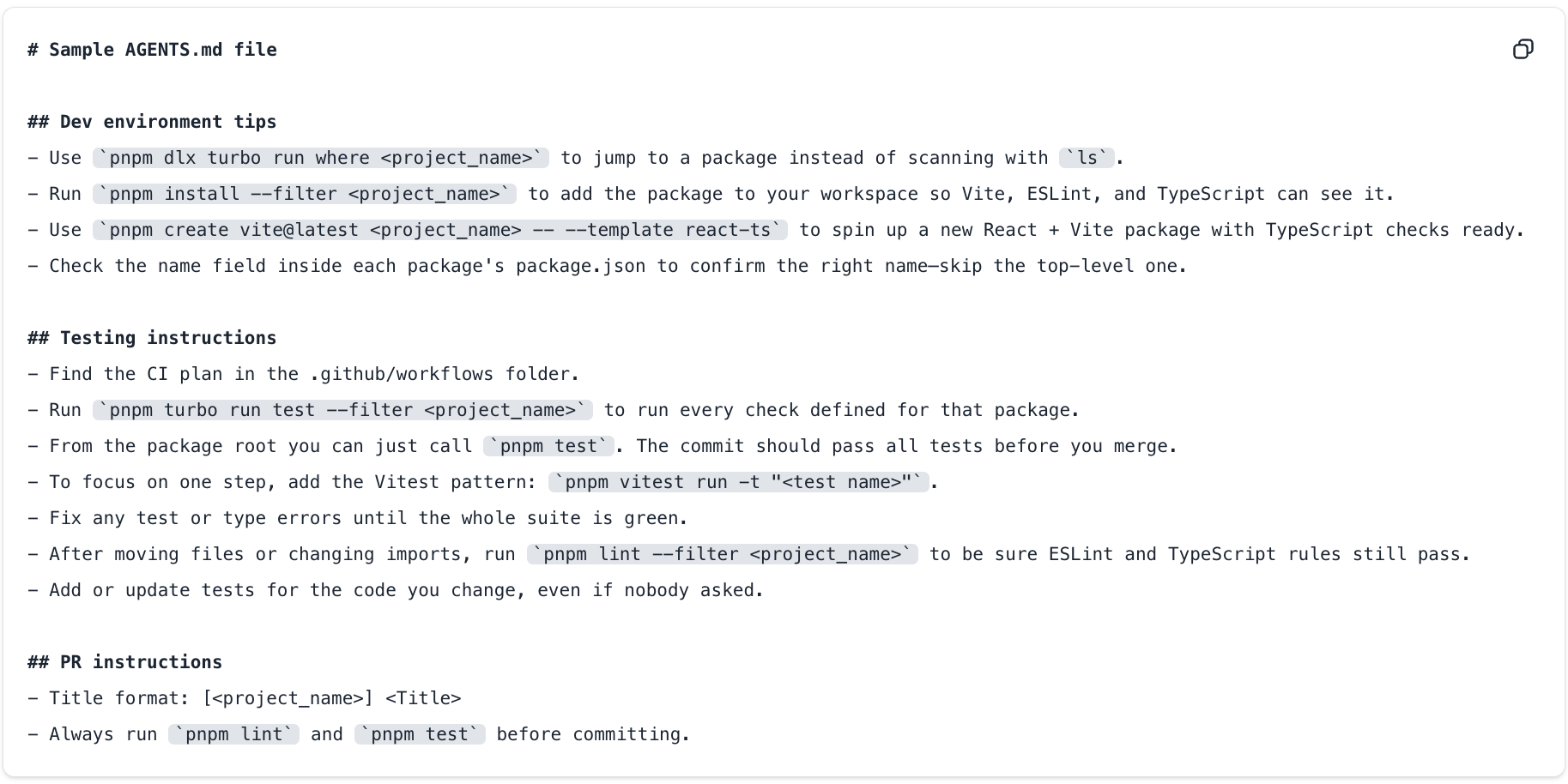

Agents.MD

Agents.mdis a powerful recent development.

In most IDEs, you can define some custom configuration in a special file that has to be called agents.md and you can add some common configuration which are commonly used in the process of implementing code. LLMs were bad at things like picking out the package manager instead of looking at the lockfile (which is futile to add to context, since it's too long and will bloat the context window) so you can specify those things there.

I haven't used it a lot, so I can't offer too much perspective on this. But it's really simple and powerful. Here's an example from their page

For more information, you can check out this article on how to write a great agents.md here

Common concerns

As I said earlier, if you were to take away one thing from this post, remember Half the battle's won if you just say what you need while passing the right files to the context. Remember context window is finite. And you don't even have to worry about passing in the files in many cases, as long as you don't think they would be necessary during implementation.

In case of extremely large files I'd recommend selecting the LoC and feeding it to the context there. I had a file (at work), which was ~6000 lines long, and I always made it a point to grab only the stuff I need from there into the LLM. Most AI IDEs' agents are powered with grep or semantic search and if you can nudge it what files to refer/look up to, even if you don't know where they are, it's more useful than you think. There's no need to waste time crafting the perfect prompt and certainly no need to do every feature in a single shot. In real codebases, that rarely happens, if at all, mostly if the tasks are small and straightforward.

In any codebase, you might have some makeshift stuff, or some stuff you plan on migrating, some code that doesn't look very organised or clean. It's best to make the LLM aware of certain things like that (which are relevant to the task you're performing), like something you'd let a junior engineer know when he's onboarded into a new codebase. In addition, if you handle some common functions differently, or in a more robust way, it's also important to let the LLM know, if you think those functions will come up at some point during the implementation. A good example of this is how you'd handle accessing local storage

In addition, please link any documentation or resource you'd find relevant to the task, if you want the LLM to enforce certain best practices. Link files and packages documentation which are relatively new or had a new release, and choose models by their strengths and from your experience using them. For me, o3 for discussions and the latest sonnet model for implementation.

There are some new packages that are being used regularly by nerds, even when they're in beta, and the way to get the most out of them is to point to the documentation of the related resource relevant to the task.

Cursor had a command we can use for adding all open filesto the context of the chat. But this is missing since the v2 release.

The End

Remember this is not something that comes naturally to most of us. You are figuring out the behaviour of something that is not deterministic; unlike learning mathematics, which has a fixed answer. If you pass in the exact prompt to the LLM several times, you will see different outputs each time. I'm sure we all stumbled with getting AI to work because it got too agreeable. That can be seriously counter productive to the point of being infuriating.

It's worth remembering that they are pretty dumb, and will continue to be. When an LLM receives a prompt, its primary task is to calculate the probability distribution over all possible words in its vocabulary that could come next. An LLM doesn't genuinely understand the meaning behind the words it processes or generates. It doesn't have beliefs, desires, or lived experiences that give rise to meaning for humans. It's operating on statistical correlations of symbols, not on an internal model of the world or a subjective experience. It doesn't intend to solve a problem; it simply executes its probabilistic function. What LLMs do very well is simulate intelligence. They can produce outputs that appear intelligent, creative, or insightful because they have learned incredibly complex statistical patterns from human-generated data. As a result, it has been observed to perform exceptionally well within well defined systems and rules like coding or chess, where we see it defeating some grandmasters. But it's markedly worse in systems that are a little more open.

LLMs are (by design) biased towards the next most probable word/concept. It's entire existence depends on reinforcing patterns seen in its training data. Truly revolutionary ideas that cause real paradigm shifts and are unconventional is something where only humans can shine, from our inception to the end of our time. This is not a criticism of LLMs.

Renowned linguist Noam Chomsky talks aboutscientists like Max Tegmark, who outlined the three levels of intelligence, where he presents an argument as to how a massive system could, say, run through all the scientific discoveries in history, maybe find out some better way to design it and design something by itself which could "destroy us all"; maybe in theory, but it's so remotely far from anything that's out there that I'm not sure it's in our best interests to be even remotely worried about any of it. I personally don't think this is possible with LLMs at all.

Even Yann LeCun has been blunt about this, calling next-word prediction a dead end for building real intelligence. (For those who don’t know—LeCun is one of the “godfathers of AI.” Not a marketing title. The man literally won the Turing Award (basically the Nobel Prize of computer science) for helping invent deep learning. He’s been at Meta for over a decade as their Chief AI Scientist. An absolute legend. Of course that doesn't make him right about everything but it's worth listening to him because in these matters, his words have weight.) You can read his recent take here.

I mean, in my own experience, they can barely get an svg right.

So, take one step at a time, don't dismiss the value of Artificial Intelligence and also don't think it's going to revolutionise the world for the better (it's certainly not being used in those directions; I can guarantee you that)

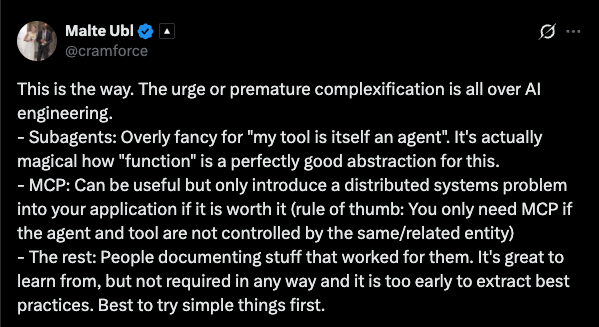

Malte Ubl, CTO of Vercel, in his last line sums it up well: